Domain-Specific Word Embeddings


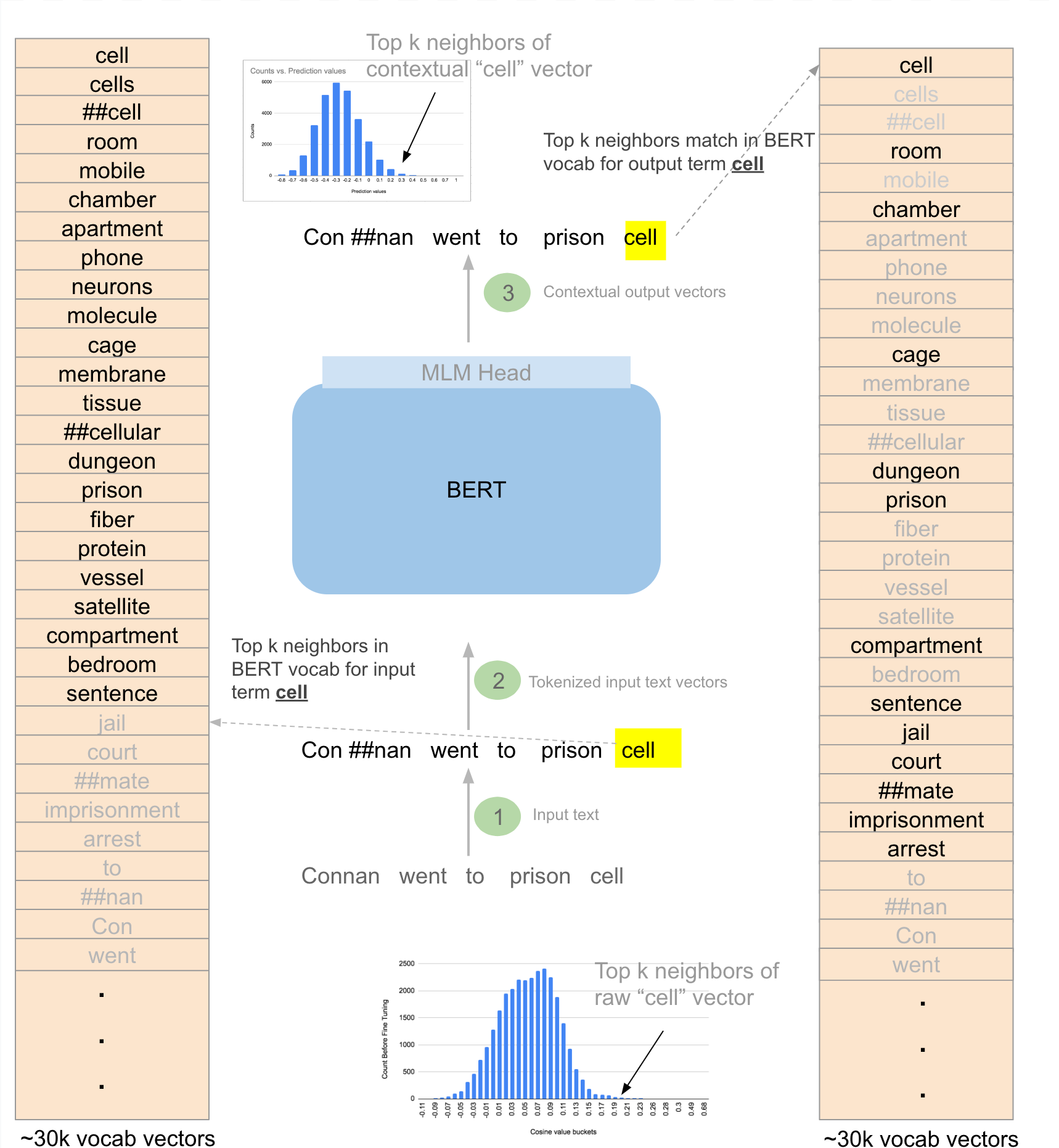

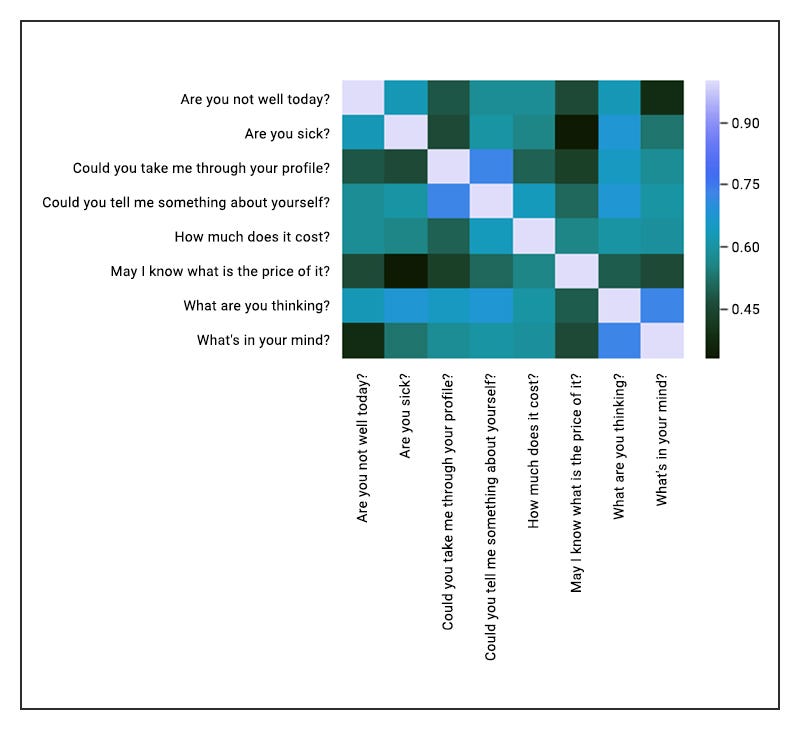

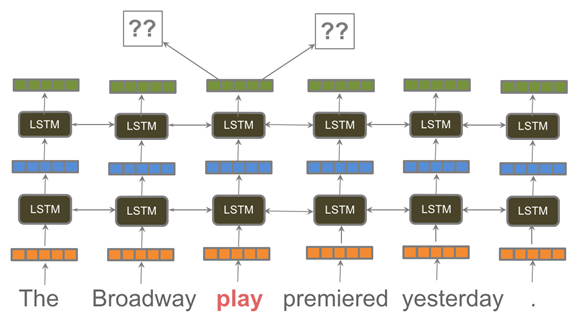

Domain-specific word embeddings for patents. To account for patent-specific language use, the authors present domain-specific pre-trained word embeddings for the patent domain. Once an application has been classified, its novelty can be examined by comparing it to previously granted patents in the same class. To this end, the authors propose a deep learning approach based on gated recurrent units (GRUs) for automatic patent classification, built on the trained word embeddings. The model is trained on a very large dataset of more than 5 million patents and evaluated on the task of patent classification.
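As a rough illustration of how a GRU-based classifier can sit on top of word embeddings, the following sketch runs a single GRU layer over a token sequence and applies a softmax output layer. It is not the authors' model: the dimensions, the random untrained weights, the toy embedding table, and all function names (`init_gru`, `gru_step`, `classify`) are assumptions chosen for illustration, and the update rule uses one common GRU convention.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    # Plain matrix-vector product on nested lists.
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def init_gru(input_dim, hidden_dim, rng):
    # One weight set per gate: update (z), reset (r), candidate (n).
    params = {}
    for gate in ("z", "r", "n"):
        params["W" + gate] = random_matrix(hidden_dim, input_dim, rng)
        params["U" + gate] = random_matrix(hidden_dim, hidden_dim, rng)
        params["b" + gate] = [0.0] * hidden_dim
    return params


def gru_step(x, h, p):
    # Update gate: how much of the candidate state replaces the old state.
    z = [sigmoid(a + b + c) for a, b, c in
         zip(matvec(p["Wz"], x), matvec(p["Uz"], h), p["bz"])]
    # Reset gate: how much of the old state feeds the candidate.
    r = [sigmoid(a + b + c) for a, b, c in
         zip(matvec(p["Wr"], x), matvec(p["Ur"], h), p["br"])]
    gated_h = [ri * hi for ri, hi in zip(r, h)]
    n = [math.tanh(a + b + c) for a, b, c in
         zip(matvec(p["Wn"], x), matvec(p["Un"], gated_h), p["bn"])]
    # Convention assumed here: h_t = (1 - z) * h_{t-1} + z * n.
    return [(1.0 - zi) * hi + zi * ni for zi, hi, ni in zip(z, h, n)]


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(tokens, embeddings, gru, out_weights, hidden_dim):
    h = [0.0] * hidden_dim
    for token in tokens:
        if token in embeddings:  # out-of-vocabulary tokens are skipped
            h = gru_step(embeddings[token], h, gru)
    return softmax(matvec(out_weights, h)), h


# Toy setup: 4-dimensional embeddings, 3 hidden units, 2 hypothetical classes.
rng = random.Random(0)
embeddings = {
    "rotor": [0.9, 0.1, 0.0, 0.2],
    "blade": [0.8, 0.2, 0.1, 0.1],
    "compound": [0.1, 0.9, 0.3, 0.0],
}
gru = init_gru(input_dim=4, hidden_dim=3, rng=rng)
out_weights = random_matrix(2, 3, rng)
probs, final_state = classify(
    "a rotor blade assembly".split(), embeddings, gru, out_weights, hidden_dim=3
)
```

In practice the embedding table would be loaded from the pre-trained domain-specific vectors and the weights learned by backpropagation in a deep learning framework; the sketch only shows the forward pass.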

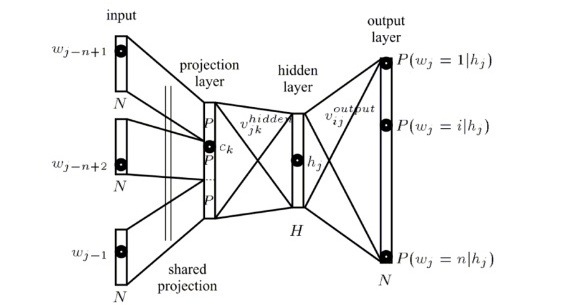

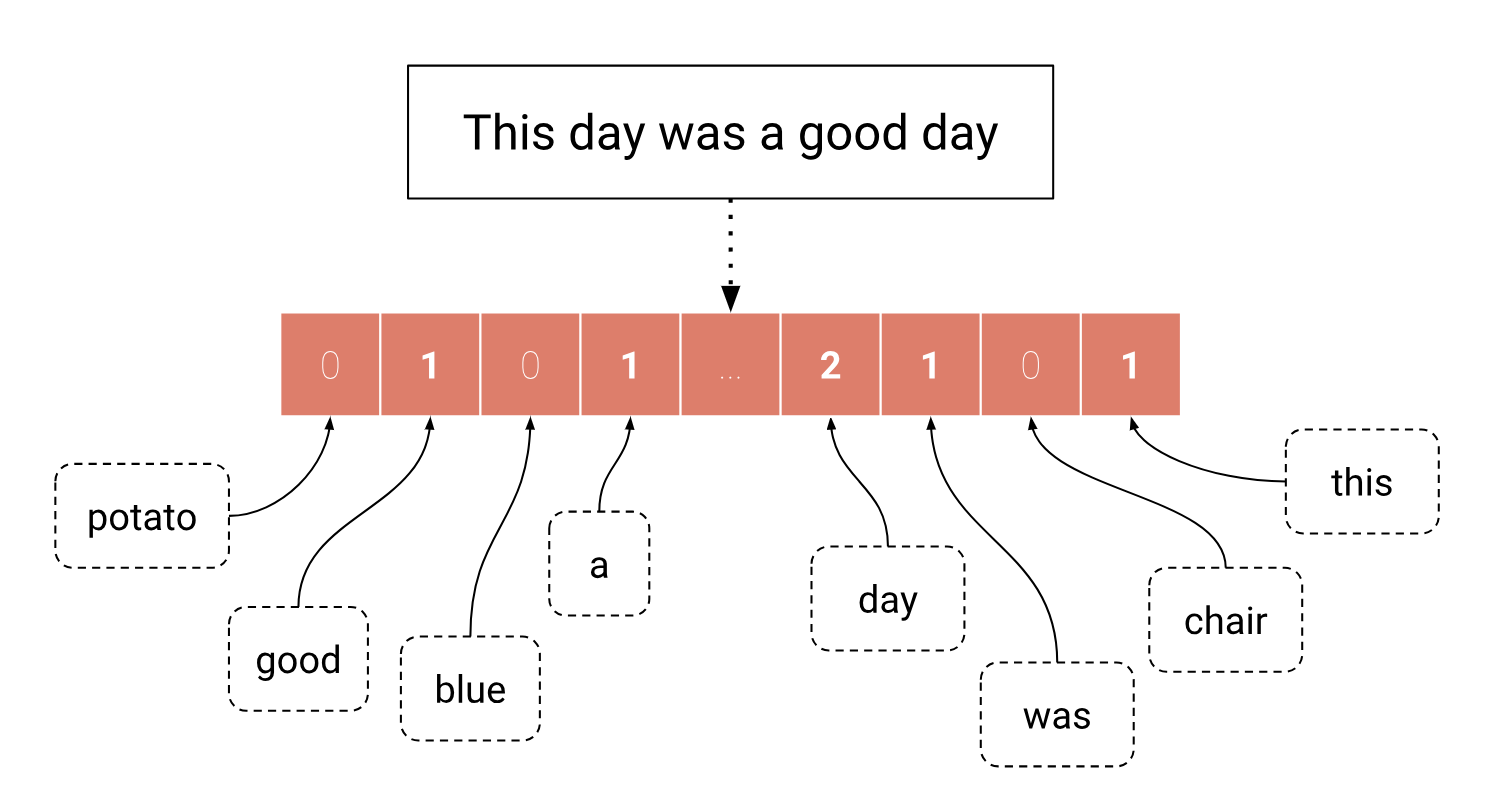

Word embedding is a natural language processing (NLP) technique that automatically maps words from a vocabulary to vectors of real numbers. Two options for a biomedical task are using Google's pre-trained word embeddings (Google word2vec) and using pre-trained biomedical word embeddings built from the open-access subset of the MEDLINE database (PubMed embeddings). Firstly, we find that the performance of general pre-trained embeddings is lacking in the biomedical domain.
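To make the idea of mapping words to real-valued vectors concrete, here is a minimal sketch that looks words up in a tiny embedding table and ranks neighbours by cosine similarity. The three-dimensional vectors are invented for illustration and do not come from word2vec or the PubMed embeddings; `cosine` and `most_similar` are hypothetical helper names.

```python
import math

# Hand-made toy vectors; real embeddings typically have hundreds of dimensions.
toy_embeddings = {
    "tumor": [0.9, 0.1, 0.0],
    "carcinoma": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.1, 0.9],
}


def cosine(u, v):
    # Cosine similarity: dot product divided by the product of the norms.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, embeddings):
    # Return the other vocabulary word whose vector is closest to `word`.
    query = embeddings[word]
    candidates = [w for w in embeddings if w != word]
    return max(candidates, key=lambda w: cosine(query, embeddings[w]))


nearest = most_similar("tumor", toy_embeddings)
```

With embeddings trained on in-domain text, related domain terms would be expected to land close together in this sense, which is the motivation for comparing general and domain-specific vectors.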

Domain-specific word embeddings for patent classification. Abstract: Patent offices and other stakeholders in the patent domain need to classify patent applications according to a standardized classification scheme.

Finally, we develop new biomedical word embeddings and make them publicly available for use by others. Which embeddings to use depends on the domain you want to use them for and on the size of your training data. For example, for the biomedical classification task that I had at hand, I tried three ways.

This is an implementation like Dis2Vec, which can be used to train word embeddings for a specific domain and produce better results than using word2vec. Secondly, we provide key insights that should be considered when working with word embeddings for any semantic task.